Google’s 46-camera ‘light field videos’ let you change perspective and peek around corners

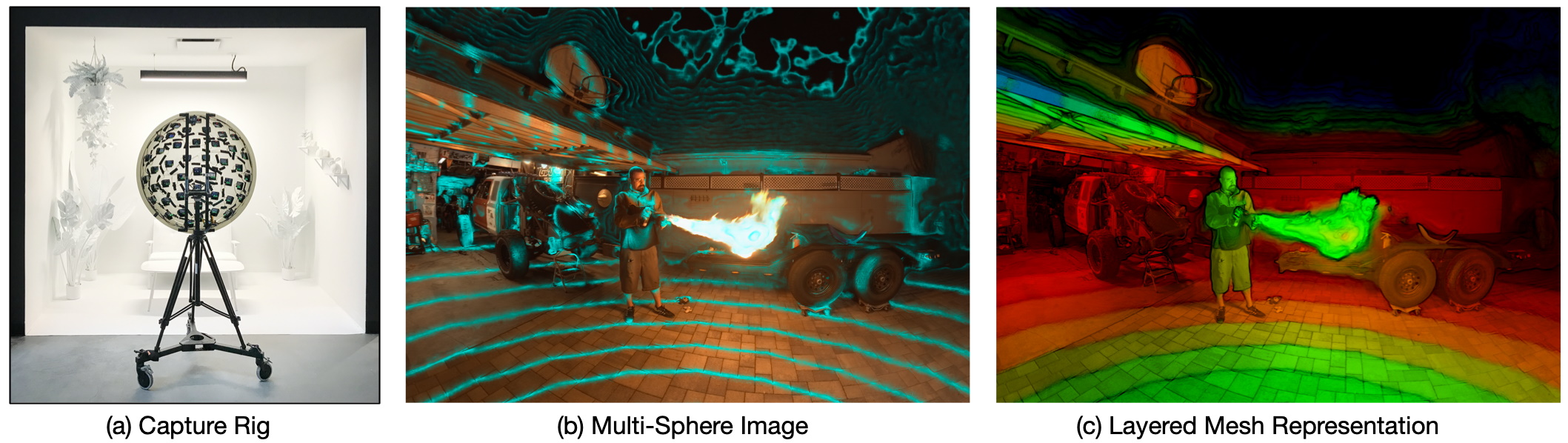

Google is showing off one of the most impressive efforts yet to turn traditional photography and video into something more immersive: 3D video that lets the viewer change their perspective and even look around objects in frame. Unfortunately, unless you have 46 spare cameras to sync together, you probably won’t be making these “light field videos” any time soon.

The new technique, due to be presented at SIGGRAPH, uses footage from dozens of cameras shooting simultaneously, forming a sort of giant compound eye. These many perspectives are merged into a single video in which the viewer can move their viewpoint and the scene reacts accordingly in real time.

The combination of high-definition video and freedom of movement gives these light field videos a convincing sense of reality. Existing VR-enhanced video generally uses fairly ordinary stereoscopic 3D, which doesn’t really allow for a change in viewpoint. And while Facebook’s method of understanding depth in photos and adding perspective to them is clever, it’s far more limited, creating only a small shift in perspective.

In Google’s videos, you can move your head a foot to the side to peek around a corner or see the other side of a given object — the image is photorealistic and full motion but in fact rendered in 3D, so even slight changes to the viewpoint are accurately reflected.

And because the rig is so wide, parts of the scene that are hidden from one perspective are visible from others. When you swing from the far right side to the far left and zoom in, you may find entirely new features — eerily reminiscent of the infamous “enhance” scene from Blade Runner.

It’s probably best experienced in VR, but you can test out a static version of the system at the project’s website, or watch a number of demo light field videos, as long as you use Chrome with experimental web platform features enabled (there are instructions at the site).

The experiment is a close cousin to the LED egg used for volumetric capture of human motion that we saw late last year. Clearly Google’s AI division is interested in enriching media, though how they’ll do it in a Pixel smartphone rather than a car-sized camera array is anyone’s guess.

from TechCrunch https://ift.tt/2YtGPEh
